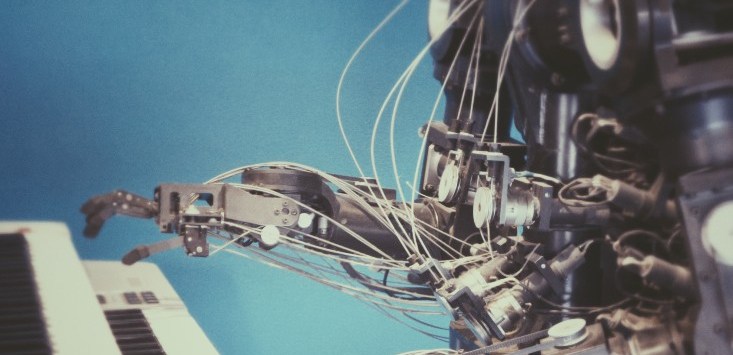

Source: Unsplash/Possessed Photography

Have you heard of the new natural language processing bot ChatGPT? This bot will generate content on virtually any topic you give it, within seconds, in the form of poetry, essays, you name it. The intuitive interface and the clarity of the responses are remarkable. And while much of the commentary around ChatGPT has questioned the ethics of this technology, I predict that if businesses don’t embrace language processing bots, they will be left behind.

A few years ago I co-created ‘Parker’, the world’s first AI-enabled privacy chatbot. What amazed me most was how quickly, and how much, Parker learnt just from people using it, so-called reinforcement learning. Once people have been using ChatGPT for a while, its responses will become even better: more precise and more personalised.

Here’s where I predict ChatGPT will be used within the next 12 months:

Creating marketing content: Anyone who creates marketing content will face a significant challenge from this tech, because the content it produces is not bad. In a relatively short space of time, it will move up the chain from generic brochure material to more sophisticated content, reports and lead generation.

Customer service: The natural language processing power of ChatGPT is a step ahead of what we experience at present with chatbots. Currently, the customer experience with chatbots is a bit hit-and-miss. Very soon, that experience will become much better and more human-like.

Research: Basic research can be done incredibly quickly by AI, even if the accuracy is not perfect. AI will start to be more commonly used for basic analysis to augment human analysis.

Writing code: The current generation of AI does a reasonable job of writing code for basic functions, as well as handling debugging tasks. Given that writing code is a very rule- and logic-based process, one can assume the opportunities here are strong.

Legal services: I think ChatGPT has the potential to do a significant amount of basic research and routine work. However, my experience as co-founder of online legal services platform LawPath shows me that people seeking legal assistance are okay with a DIY solution up to a point, but they almost always want a human lawyer to give their stamp of approval to the advice. At the end of the day, legal advice is about judgement and, at least at present, AI doesn’t have judgement.

This tech is intuitive, and there are great opportunities for people who can use it to become better at what they do and to improve the services they offer their customers and stakeholders.

But I do have concerns, and safety is a big one. Great minds such as Stephen Hawking and Bill Gates have expressed concern about whether AI poses a threat to humanity. There is something to this, so there is a need for global agreements to restrict the weaponising of AI.

We also know bias has been an issue with AIs that have been used in recruiting and other HR processes. There needs to be awareness of this issue, as well as transparency and accountability in how AIs are used.

Lastly, there are concerns about the misuse of AI in education. I think that all levels of education are going to have to rethink the assignment-testing model. I don’t think essay writing will be a viable way to test students in the future unless it is done under conditions where one can be certain they are not able to access AI.

Nick Abrahams is the co-creator of AI-enabled privacy chatbot Parker, an entrepreneur, lawyer, author and ASX non-executive director.
