Actor, director, model, teen vampire movie star – now Kristen Stewart can add research author to her list of credentials. Stewart, best known for her portrayal of Bella Swan in the Twilight Saga films, has co-written an article published in Cornell University’s online library arXiv.

It explains the artificial intelligence technique used in her new short film, Come Swim, that enables footage to take on the visual appearance of a painting.

Many popular software programs, such as Adobe Photoshop and GIMP, already provide filters that can make photographs take on the general style of oil paintings, pen sketches, screen-prints or chalk drawings, for example.

The algorithms needed to accomplish this first began to produce aesthetically pleasing results in the early 1990s.

The techniques that Stewart and her colleagues examine in their article take this idea much further. The new methods they employed enable photographs or video sequences to take on not just a general style but also the look of a specific painting.

This could be as abstract or impressionistic as desired, enabling the film-maker to take inspiration from anyone from Pollock to Picasso. The technique is known as style transfer.

Come Swim was reportedly inspired by a painting by Stewart, which seems to have provided the impetus for the project that the article describes.

The short film uses the style transfer technique to generate dream-like sequences for the opening and closing scenes. The paper, co-written with producer David Ethan Shapiro (Starlight Studios) and visual effects artist and research engineer Bhautik Joshi (Adobe), describes the experience of creating these sequences.

The article is relatively brief, at just three pages including references. It doesn’t provide rigorous testing, or give a thorough explanation of the algorithms that underpin the software used.

Instead, it is more of a case study that gives an anecdotal account of the filmmakers’ experiences and how they fine-tuned the style transfer system.

It highlights the progress that has recently been made in using artificial intelligence software to apply style transfer techniques to video sequences, making it possible to use them in professional film-making.

Movie frames, along with the painting to be imitated (known as the style image), are fed into what’s known as a convolutional neural network. This is an artificial intelligence system that is inspired, both in what it does and how it is structured, by the way that neurons are interconnected in the visual cortex of the brain.
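The basic operation inside such a network can be sketched in a few lines of plain Python. This is an illustration only, not the software used for the film: the tiny 4x4 "frame", the hand-written edge filter and the function name `convolve2d` are all assumptions made for the example. A real network learns many filters from data and stacks them in layers.

```python
def convolve2d(image, kernel):
    """Slide a small kernel over a 2D image (no padding, stride 1).

    Like most neural network libraries, this computes a cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            # ReLU: keep positive responses, discard negative ones
            row.append(max(total, 0.0))
        output.append(row)
    return output


# Hypothetical greyscale frame: dark on the left, bright on the right.
frame = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written filter that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(frame, vertical_edge)
print(feature_map)
```

The filter responds only in the middle column, where the dark and bright halves of the frame meet. Each small patch of the output depends on a small patch of the input, loosely mirroring how cells in the visual cortex respond to a limited region of the visual field.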

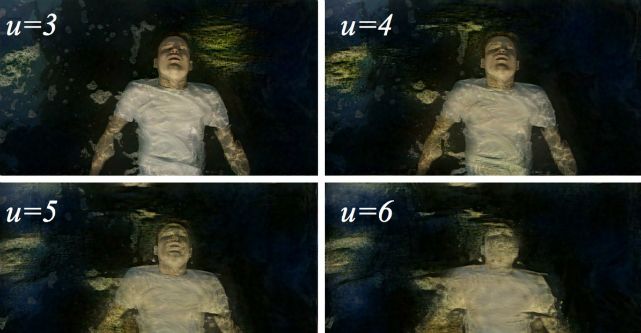

The neural network was trained to process the movie frames using the example of Stewart’s painting (the style image) to generate the stylised footage.

The team adjusted the software’s parameters so that frames could be processed quickly, with minimal computing power, while still producing footage that was “in service of the story”. The parameters they experimented with included colour and texture, the source image’s resolution, the number of iterations the algorithm ran for, and the style transfer ratio (the degree to which the style image was imitated).
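One way to picture the style transfer ratio is as a weight in the score the algorithm tries to minimise. The sketch below assumes a common formulation of style transfer, in which "content" is compared directly through feature values and "style" through correlations between feature channels (a Gram matrix). The article does not give the formula the film-makers used, so the function names, the toy feature values and the exact weighting are assumptions.

```python
def gram_matrix(features):
    """Correlations between feature channels.

    `features` is a list of channels, each a flat list of activations.
    """
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
        for fi in features
    ]


def mean_squared_difference(a, b):
    """Average squared difference between two equally sized 2D lists."""
    flat_a = [x for row in a for x in row]
    flat_b = [x for row in b for x in row]
    return sum((x - y) ** 2 for x, y in zip(flat_a, flat_b)) / len(flat_a)


def total_loss(frame_feats, output_feats, style_feats, style_ratio):
    """Score an output image: lower is better.

    A larger `style_ratio` penalises departures from the style image more
    heavily, so the output imitates the painting more strongly.
    """
    content_loss = mean_squared_difference(frame_feats, output_feats)
    style_loss = mean_squared_difference(
        gram_matrix(output_feats), gram_matrix(style_feats)
    )
    return content_loss + style_ratio * style_loss


# Toy feature values (hypothetical): two channels, two positions each.
frame_feats = [[1.0, 0.0], [0.0, 1.0]]
style_feats = [[1.0, 1.0], [1.0, 1.0]]

# Start from an output identical to the original frame.
output_feats = frame_feats

loss_no_style = total_loss(frame_feats, output_feats, style_feats, 0.0)
loss_with_style = total_loss(frame_feats, output_feats, style_feats, 2.0)
print(loss_no_style, loss_with_style)
```

With a ratio of zero, an unchanged frame already scores perfectly, so nothing would be altered. Raising the ratio makes the unchanged frame score worse, pushing the algorithm to repaint it over successive iterations.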

Smoother sequences

Until recently, style transfer in video sequences has been difficult because each frame would be converted into an individual image that didn’t necessarily look like the others in the sequence.

This meant when processed frames were combined into a video it produced jarring changes in the image on screen, compromising the aesthetic effect.

These limitations have now been overcome to some degree by software that can smoothly blend between two fixed images, enabling smooth style-transferred video sequences to be created.
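The flicker problem, and the effect of blending, can be illustrated with a deliberately simple sketch. It is a stand-in rather than the method used in the film: each stylised frame is mixed with the previous output frame, and "flicker" is measured as the average change between consecutive frames. The frame values, the `blend` weight and both function names are assumptions for the example.

```python
def smooth_frames(stylised_frames, blend=0.5):
    """Mix each frame with the previous smoothed frame.

    `blend` is the share taken from the previous output: 0 leaves the
    frames untouched, values near 1 change the picture very slowly.
    """
    smoothed = [list(stylised_frames[0])]
    for frame in stylised_frames[1:]:
        previous = smoothed[-1]
        smoothed.append(
            [blend * p + (1 - blend) * f for p, f in zip(previous, frame)]
        )
    return smoothed


def flicker(frames):
    """Mean absolute pixel change between consecutive frames."""
    total = 0.0
    count = 0
    for a, b in zip(frames, frames[1:]):
        total += sum(abs(x - y) for x, y in zip(a, b))
        count += len(a)
    return total / count


# Hypothetical two-pixel frames that flip between two looks,
# as independently stylised frames might.
raw_frames = [[0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]

smoothed_frames = smooth_frames(raw_frames, blend=0.5)
print(flicker(raw_frames), flicker(smoothed_frames))
```

The smoothed sequence changes far less from frame to frame, at the cost of some lag and blurring when the underlying footage really does move. More sophisticated approaches aim to keep the stylisation stable without that trade-off.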

These sequences can even be generated in real time, and can draw on multiple style images (for example, several paintings) to produce videos that are a blended pastiche of numerous visual styles.
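One plausible way to combine several paintings is to average their style statistics with chosen weights before applying them. The sketch below shows only that weighting step. The function name and the two-number "style targets" are hypothetical, and the article does not say how real systems perform the blend.

```python
def blend_style_targets(style_targets, weights):
    """Weighted average of several style descriptions.

    Each style target is a flat list of numbers summarising one painting.
    Weights are normalised so that they sum to one.
    """
    if len(style_targets) != len(weights):
        raise ValueError("need exactly one weight per style target")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    shares = [w / total for w in weights]
    size = len(style_targets[0])
    return [
        sum(share * target[i] for share, target in zip(shares, style_targets))
        for i in range(size)
    ]


# Two hypothetical paintings, weighted three to one.
painting_a = [1.0, 0.0]
painting_b = [0.0, 1.0]

blended = blend_style_targets([painting_a, painting_b], [3, 1])
print(blended)
```

Changing the weights over time would let a sequence drift gradually from one painting's look to another's.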

These exciting developments will enable video-based art installations, short films and perhaps feature-length films to be manipulated to adopt a particular visual style.

This means entire films could be given the impression of an animated painting or another source that the production team wishes to imitate. Stewart and her colleagues have made use of pioneering new production techniques that open the door to an infinite number of imaginative visual styles.

Ian van der Linde is a Reader in the Department of Computing and Technology and Vision and Eye Research at Anglia Ruskin University.

This article was originally published on The Conversation.
