Source: SmartCompany

LinkedIn has started rolling out a new generative AI feature to posts on the platform. Not everyone has it yet, and details around transparency, legitimate information sharing, and using posts to train AI have been light.

LinkedIn first announced its intentions to offer generative AI posts a few months ago. It’s also not the first generative AI tool to be released by the organisation, which already offers AI assistance for ‘Collaborative Articles’ as well as job, advertising, and profile description writing.

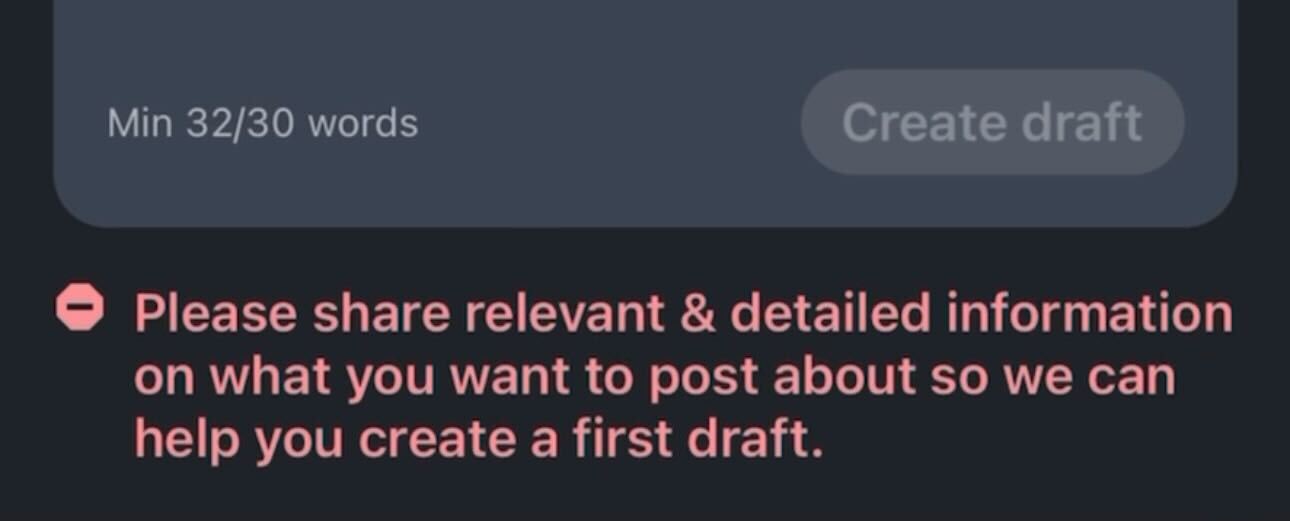

When creating a fresh post, this tool gives users the option to create a ‘draft’ with the use of AI. It asks users for a detailed explanation of what they want the post to say, including examples.

If the AI deems there isn’t enough detail, users are asked to essentially try again:

Image: Steph Clarke

Users have begun reporting that they gained access to this feature over the past week. It’s currently unclear how these users have been selected, for example by region or account type.

For example, this author has seen posts from users in different areas of Australia with access to the feature but does not personally have access.

“When it comes to posting on LinkedIn, we’ve heard that you generally know what you want to say, but going from a great idea to a full-fledged post can be challenging and time-consuming. So, we’re starting to test a way for members to use generative AI directly within the LinkedIn share box,” Keren Baruch, Director of Product at LinkedIn, wrote in a blog post announcing the new feature.

“Responsible AI is a foundational part of this process so we’ll be moving thoughtfully to test this experience before rolling it out to all our members.”

LinkedIn wouldn’t answer questions around this generative AI feature

This thoughtful approach to AI unfortunately did not extend to questions about the rollout. The organisation declined to answer SmartCompany’s questions and pointed instead to previous blog posts it had published regarding AI.

This included its responsible AI principles, its approach to detecting AI-generated profile photos, how it uses AI to protect member data, and a post on how it applied its responsible AI principles in practice.

Some of our questions included potential plans to flag posts that had been written by AI for transparency, measures being put in place to ensure post accuracy and not help spread misinformation, and whether these posts will be used to train the large language model (LLM) that LinkedIn’s AI is powered by.

On that, it’s been previously reported that LinkedIn has used both GPT-4 and GPT-3.5 to build its various AI-powered writing suggestions.

LinkedIn has also specifically mentioned Microsoft’s leadership when it comes to the platform’s Responsible AI Principles, which makes sense since the tech giant owns the platform. Some of these principles include providing transparency, upholding trust, and embracing accountability.

Addressing concerns around responsible AI

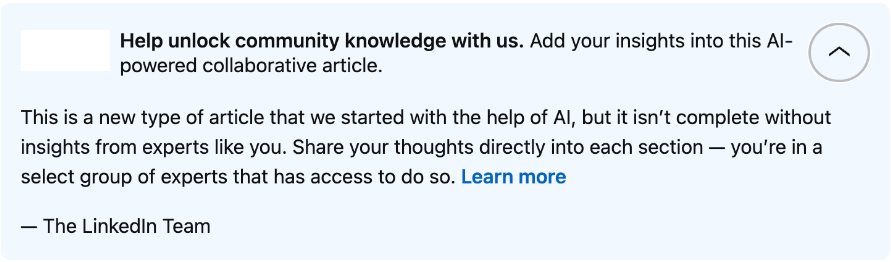

It’s worth noting that the ‘Our Responsible AI Principles in Practice’ post does address some concerns around the use of AI on the platform.

For example, it showed that when AI has been used to help create Collaborative Articles, job descriptions, and profile descriptions, the user is made aware that AI was used to generate them.

Image: LinkedIn

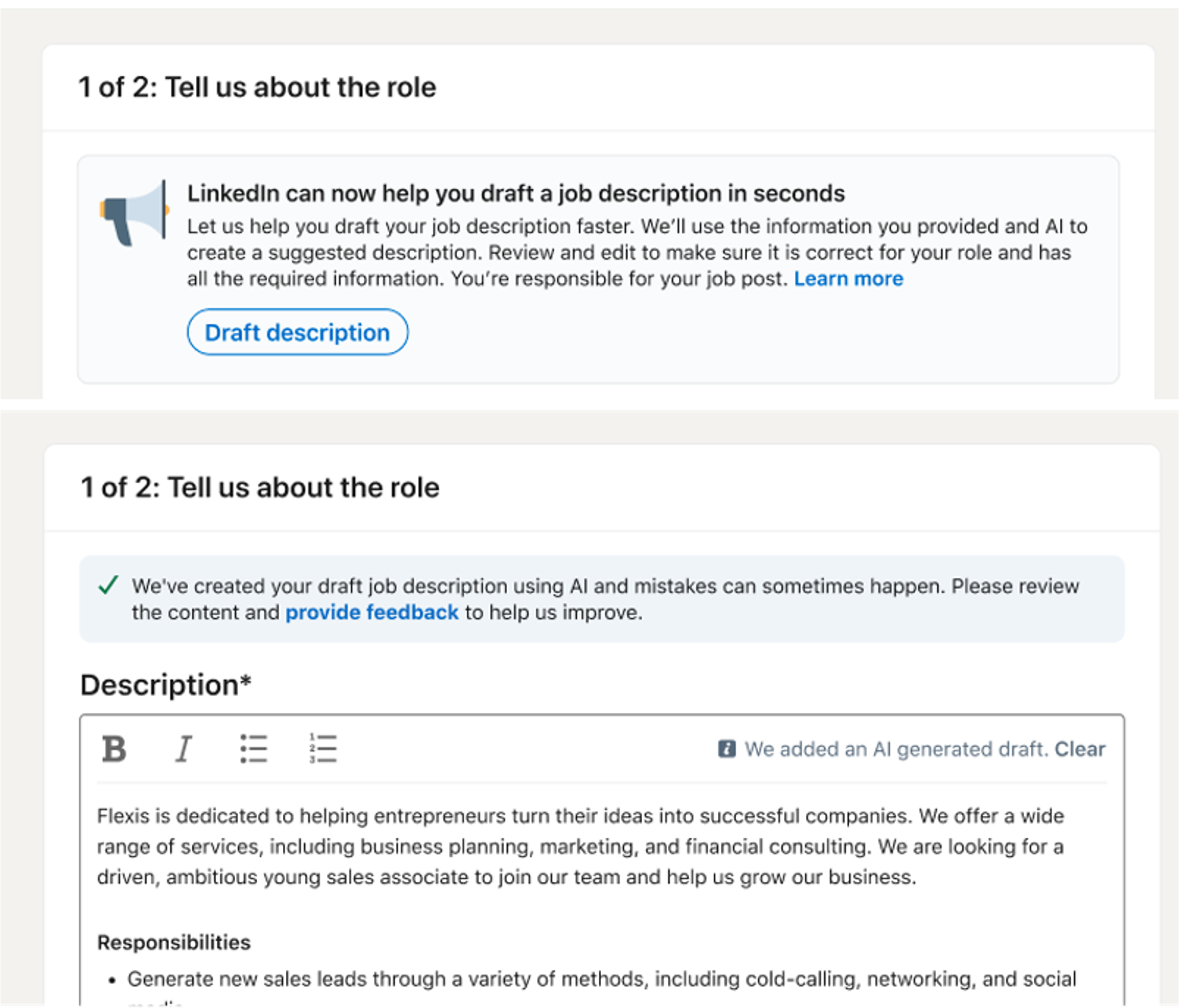

However, the language is quite specific here and it’s unclear whether this same transparency is afforded to readers of this content. For example, it does not say whether other LinkedIn members will also see a box explaining that a job, article, or profile has been written with LinkedIn’s internal AI.

Image: LinkedIn

Similarly, this specific blog post went into detail about trust, accountability and safety. The major focus of this was around AI governance and the checks, balances and potential biases LinkedIn goes through with its use of AI. And that’s fantastic. These are integral things that any organisation utilising generative AI needs to be doing.

But it still does not answer the question around public accountability and transparency once generative AI is publicly facing on the platform.

And this is a shame. When LinkedIn is making a big deal about transparency with generative AI, why is it not clear whether the readers, as well as the creators, will be made aware of when these tools have been implemented?

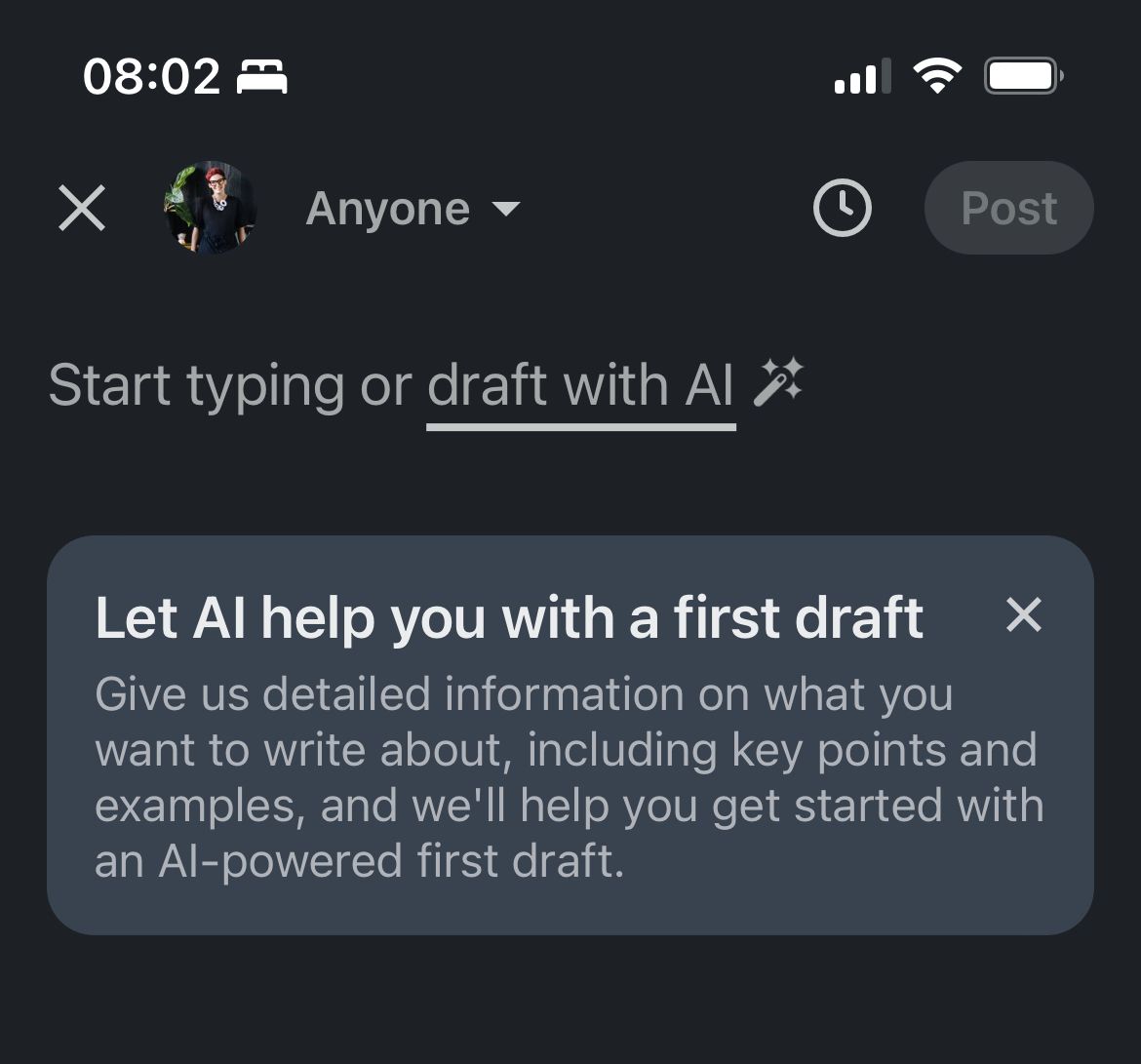

Image: Steph Clarke

“At a time when LinkedIn is making algorithm adjustments in an attempt to elevate conversations on the platform, the decision to build in a native tool that creates more generic content seems like an unusual decision,” Steph Clarke, a Melbourne Futurist, said to SmartCompany.

Clarke also happens to be one of the LinkedIn users who now have access to its generative AI feature.

“There’s an increased call for more transparency in how and where AI is used. Atlassian called for a traffic light system just last week, and New York requires organisations to declare, and audit, AI use in recruitment decisions.

“LinkedIn has potentially missed an opportunity to lead the way on this transparency in the social media space when it built this AI post generator into the platform.”

Being the devil’s advocate

While AI is certainly not new, it is still in its infancy when it comes to public use (particularly generative AI) and the necessary regulations and responsibilities that come with that.

There is a great deal of warranted fear around job losses, potential bias, the spread of misinformation and harmful content generation.

And it’s because of this that people are calling for that extra layer of transparency when it comes to AI-generated content. And I’m one of them.

But I was challenged by one perspective on Clarke’s post about LinkedIn’s generative AI rollout. The commenter pointed out that the use of ghostwriters has been common practice for years, from books to blog and social media posts, particularly in the business world.

And that’s a damn good point.

Copywriters, PR professionals, and even interns have been penning words for CEOs since forever. Most professionals know this, and yet disclosure has never been an expectation. Many of us are demanding it now, though, with the introduction of widely available generative AI.

Of course, there are other considerations when it comes to humans versus robots. AI hallucinations and incorrect ‘facts’ are still real problems, for example.

But I’d be lying if I didn’t admit this gave me pause, like so many things in the greater AI debate do.
