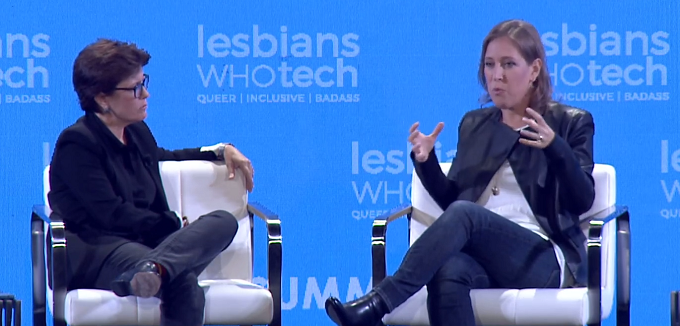

YouTube chief executive Susan Wojcicki addressing a pedophile scandal over the weekend. Source: YouTube.

YouTube’s chief executive Susan Wojcicki has defended the platform’s efforts to curb violent and inappropriate content in a testing interview about the future of the video-hosting website.

Grilled by New York Times columnist Kara Swisher at a tech summit in San Francisco over the weekend, Wojcicki addressed allegations that a network of pedophiles was using YouTube to communicate.

The revelations, which first surfaced last month, have prompted Australian supermarket giants Coles and Woolworths to suspend advertising on the platform, alongside American brands Nestle and AT&T.

It’s not the first time brands have vacated Google-owned YouTube either. Back in 2017, dozens of businesses suspended their marketing spend after it emerged their ads were being served on hate-speech videos promoting terrorism.

“We became aware of some comments, the videos were okay, but the comments with those videos … as soon as we were made aware of them (the number was in the low thousands) we removed comments off of tens of millions of videos,” Wojcicki said of the pedophile scandal.

YouTube was forced to shut down over 400 channels and disable comments on tens of millions of videos last week amid the pedophile reports, changing its policy to prevent comments on all videos featuring young minors.

Big brands haven’t taken kindly to the latest scandal. A spokesperson for Coles confirmed it had halted YouTube advertising, while a Woolworths spokesperson said ad spend was paused.

“We’ll continue to monitor the situation closely with our agency partners and Google,” the spokesperson said.

In the third quarter of last year, YouTube removed almost eight million videos. Machines took down 75% of them, and the majority of those had no views when they were removed.

Asked why she had implied the platform was not aware of the problem, Wojcicki explained that YouTube has been working on child safety for several years.

“I’m a mom, I actually have five children from 4 to 19, so I understand kids, and at least as a parent, I understand it and really want to do the right thing,” she said.

Despite that work, exploitative content aimed at children has still made its way onto the platform.

Last week, the Washington Post revealed that suicide tips were being spliced into videos on the YouTube Kids platform, an area of the website touted as a safe space for minors.

Wojcicki said YouTube was monitoring instances of exploitative and dangerous behaviour on its platform.

“We are monitoring it, but you have to understand the volume we have is quite substantial,” Wojcicki said.

“You have all reaped the benefit, but not the responsibility,” Swisher responded, steering the conversation to YouTube’s broader role as a major content distribution channel.

The role multinational tech companies such as Google, Facebook and Twitter play in propagating illegal content has forced a Silicon Valley scramble for better content moderation.

Facebook has established so-called ‘war rooms’ of moderators to monitor the spread of intentionally incendiary and fake information, while Google’s YouTube has invested in more robust automatic detection software.

YouTube committed to having 10,000 human content moderators by the end of last year but still uses automated processes to scan the 500 hours of video uploaded to the website every minute.

“There’s more progress to be made, but in the last year [we] have built very significant teams to be able to address this,” Wojcicki said.

“We have to use humans and machines to fix this problem,” she said.
